Troubleshooting

“We cannot solve our problems with the same level of thinking that created them.”

― Albert Einstein

Uploading dataset fails

Your dataset file must meet the following criteria:

- The combined size of all dataset data files must not exceed 100 MB.

- The dataset must contain columns for ID and Timestamp. For more information, see Entities.

- The first row must contain the feature/entity names.

Here's an example of what a dataset file should look like, where:

- Features: 'Device', 'Age', and 'Gender'

- Prediction: 'is_fraud'

- Label (ground truth): 'is_fraudulent'

- ID: 'ID'

- Timestamp: 'Timestamp' (supported format: yyyy-mm-dd hh:mm:ss.SSS)

| ID | Timestamp | Device | Age | Gender | is_fraud | is_fraudulent |
|---|---|---|---|---|---|---|
| 26546 | 2022-04-11 08:50:33 | Mobile | 26 | Female | 1 | True |
| 26547 | 2022-04-11 08:51:25 | Desktop | 22 | Male | 0 | False |
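The criteria above can be checked locally before you upload. The following is a minimal sketch, not part of Superwise: it assumes the dataset files are CSV, that the ID and Timestamp columns are literally named `ID` and `Timestamp` as in the example, that 100 MB means 100 × 1024 × 1024 bytes, and that timestamps without milliseconds (as in the example rows) are also acceptable. The function names are hypothetical.

```python
import csv
import os
from datetime import datetime

# Assumption: "100MB" is read as 100 * 1024 * 1024 bytes.
MAX_TOTAL_BYTES = 100 * 1024 * 1024
# Assumption: column names match the example table above.
REQUIRED_COLUMNS = {"ID", "Timestamp"}
# yyyy-mm-dd hh:mm:ss.SSS, plus the variant without fractional seconds.
TIMESTAMP_FORMATS = ("%Y-%m-%d %H:%M:%S.%f", "%Y-%m-%d %H:%M:%S")


def parse_timestamp(value):
    """Return a datetime if the value matches a known format, else None."""
    for fmt in TIMESTAMP_FORMATS:
        try:
            return datetime.strptime(value, fmt)
        except ValueError:
            continue
    return None


def check_dataset_files(paths):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    total = sum(os.path.getsize(p) for p in paths)
    if total > MAX_TOTAL_BYTES:
        problems.append(f"total size {total} bytes exceeds {MAX_TOTAL_BYTES} bytes")
    for path in paths:
        with open(path, newline="", encoding="utf-8") as handle:
            reader = csv.DictReader(handle)  # first row is used as the header
            missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
            if missing:
                problems.append(f"{path}: missing column(s) {sorted(missing)}")
                continue
            # Report only the first bad timestamp per file to keep output short.
            for row_number, row in enumerate(reader, start=2):
                if parse_timestamp(row["Timestamp"] or "") is None:
                    problems.append(
                        f"{path}: row {row_number} has an unsupported "
                        f"Timestamp {row['Timestamp']!r}"
                    )
                    break
    return problems
```

Calling `check_dataset_files(["my_dataset.csv"])` (a placeholder file name) returns an empty list when none of these checks fail. It does not guarantee that the upload will succeed.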

Data ingestion fails

The most common cause of data ingestion failure is a schema mismatch. Make sure you use the same schema for data ingestion as is used in the uploaded dataset.
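One way to catch a mismatch before sending data is to compare column names on your side. The sketch below is only an illustration under stated assumptions: it assumes CSV input, compares column names only (not data types), and uses hypothetical helper names `read_header` and `schema_diff` that are not part of any Superwise SDK.

```python
import csv


def read_header(path):
    """Return the column names from the first row of a CSV file."""
    with open(path, newline="", encoding="utf-8") as handle:
        return next(csv.reader(handle), [])


def schema_diff(dataset_columns, ingestion_columns):
    """Report columns that appear in only one of the two schemas."""
    dataset, ingestion = set(dataset_columns), set(ingestion_columns)
    return {
        "missing_from_ingestion": sorted(dataset - ingestion),
        "unexpected_in_ingestion": sorted(ingestion - dataset),
    }


# Example: the ingestion data spells 'Gender' in lowercase.
diff = schema_diff(
    ["ID", "Timestamp", "Device", "Age", "Gender", "is_fraud"],
    ["ID", "Timestamp", "Device", "Age", "gender", "is_fraud"],
)
print(diff)
```

Here both lists in the result are non-empty, because the comparison is case-sensitive: `Gender` is reported as missing and `gender` as unexpected.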

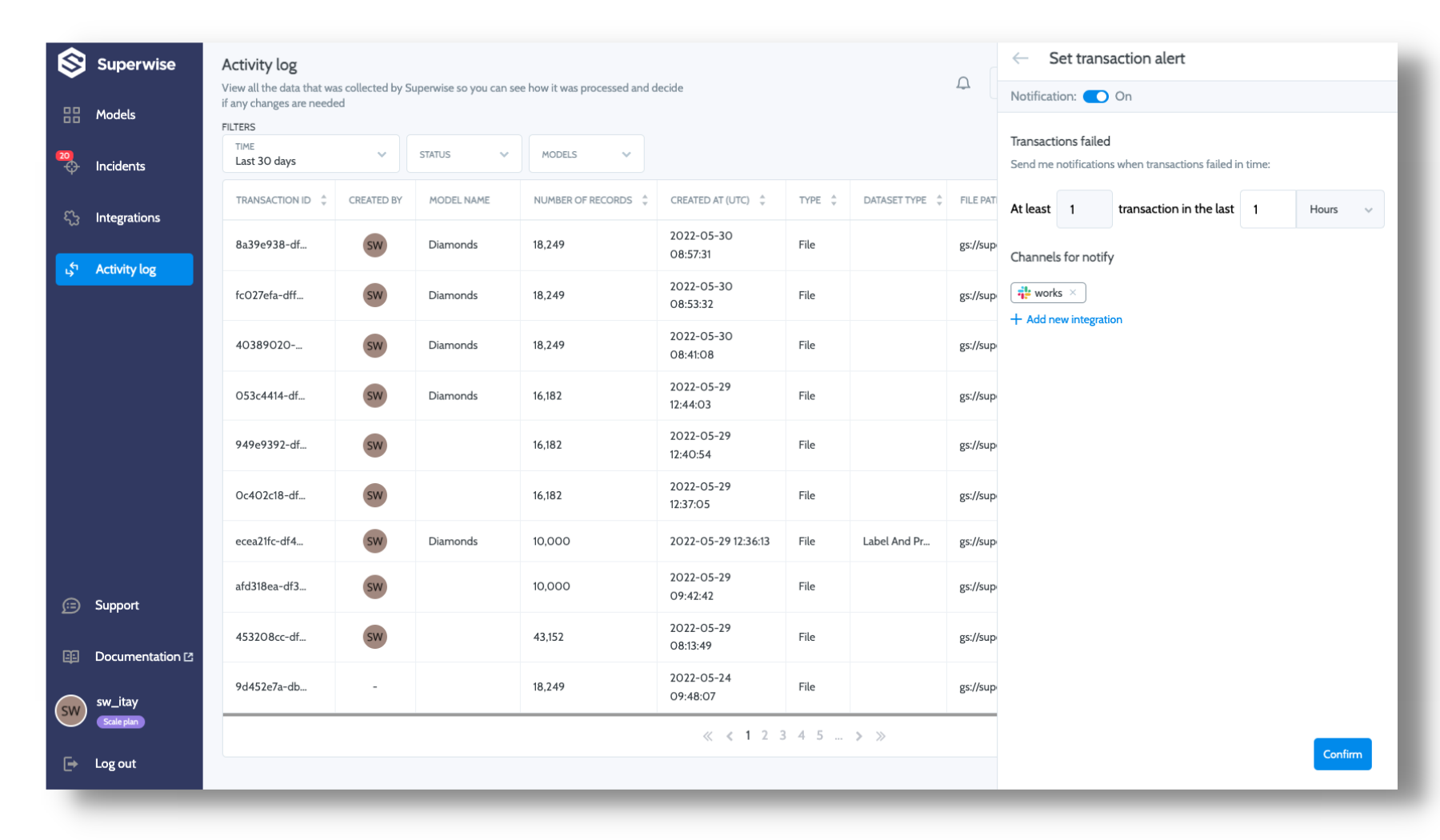

Superwise lets you track and monitor your data transactions. You can also track any failed transactions by setting an alert mechanism that will send an appropriate notification message to one of the suggested integration channels.

Configure alert mechanism

Specify how many failed transactions must occur during the selected time period for you to receive a notification.

Pro tip

Configure the alert mechanism to match your data sending method. For example, if you send data in a stream (records), getting alerts for a certain number of failed transactions may be less relevant than when you send a data file.
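To make the threshold rule concrete, here is a small sketch of the general idea: count the failures inside a time window and compare the count to a threshold. It is not Superwise's implementation. The function name and the "at least `threshold`" comparison are assumptions made for illustration.

```python
from datetime import datetime, timedelta


def should_alert(failure_times, now, window, threshold):
    """Return True when at least `threshold` failures fall inside the last `window`."""
    window_start = now - window
    recent = [t for t in failure_times if window_start <= t <= now]
    return len(recent) >= threshold


now = datetime(2022, 4, 11, 9, 0, 0)
# Failures that happened 2, 10, 45 and 90 minutes before `now`.
failures = [now - timedelta(minutes=m) for m in (2, 10, 45, 90)]

# Three failures fall inside the last hour, so a threshold of 3 is reached.
print(should_alert(failures, now, timedelta(hours=1), threshold=3))     # True
# Only two failures fall inside the last 15 minutes.
print(should_alert(failures, now, timedelta(minutes=15), threshold=3))  # False
```

With file-based ingestion a single failed transaction can represent a whole file, so a low threshold may suit it better. With streamed records a higher threshold or a shorter window may reduce noise.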

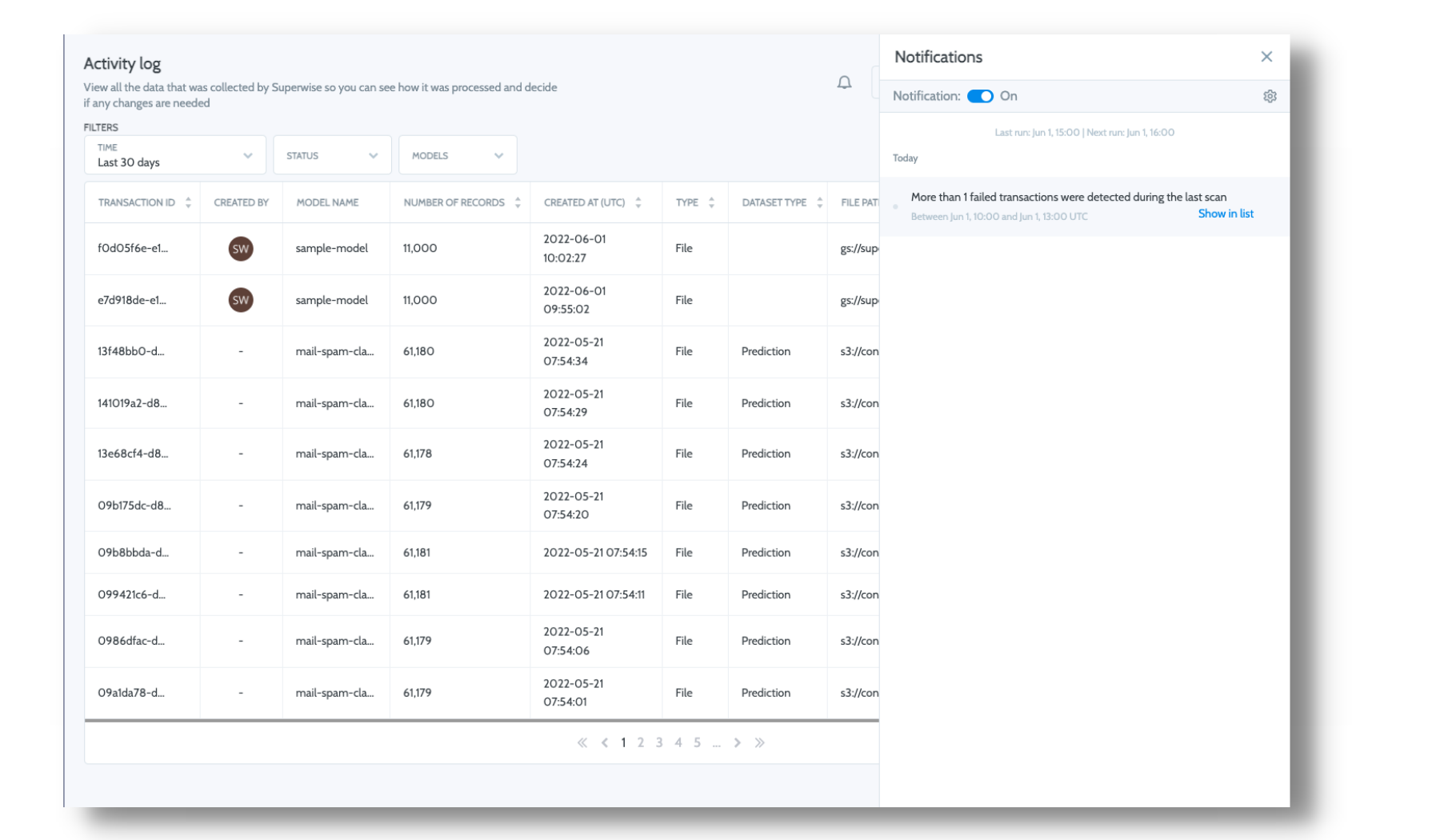

Notifications log

You can keep track of your failed transactions in the notification log. Another option is to select Show in list to filter the relevant failed transactions.

How can I see the record/prediction?

For security reasons, Superwise does not keep the raw data available. It is used only for aggregation and metric calculations.

I configured Segments but the entire segment shows 0 predictions

Analysis for a segment starts from the moment you create it and is not applied retrospectively to historical data. This means that from the moment of creation until you log relevant production data into Superwise, you will see 0 as the number of predictions under that segment.

I logged production data into Superwise and can't see it (quantity is zero)

There are several possible reasons for such behavior:

- The transaction may have failed. Check the notifications log described under Data ingestion fails to find out if this is the case.

- You logged data in batches, and it hasn't yet arrived at Superwise. It can take up to several minutes for the data to be updated in the UI, so wait a few minutes and refresh the screen.

