6. Log predictions

Once you have registered a model and a version that you want to monitor, start logging its production serving data. Superwise provides several flexible ways to do this, supporting both streaming and batch use cases.

However you log your data, every log operation creates a new transaction in the Superwise platform and assigns it a unique transaction ID.

To troubleshoot a logging issue, open the Data ingestion page in Superwise and search for the relevant transaction ID.

Stream logging

In stream mode, your ML serving pipeline logs prediction records directly through the Superwise SDK.

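```python
from superwise import Superwise

sw = Superwise()

# `inputs` is a pandas DataFrame of features; add the model's predictions as a column
inputs["prediction"] = my_pipeline.predict(inputs)

# Convert the DataFrame into a list of per-record dictionaries
records = inputs.to_dict(orient="records")

transaction_id = sw.transaction.log_records(
    model_id=diamond_model.id,
    version_id=new_version.id,
    records=records,
    metadata={"KEY": "VALUE"},  # optional
)
```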

Best practices for logging records

For efficiency, log records in batches when possible.

The maximum number of records that can be sent together is 1,000.
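Because a single call accepts at most 1,000 records, a larger set of records has to be split before logging. The sketch below shows one way to do that in plain Python. `chunk_records` is a hypothetical helper, not part of the Superwise SDK, and the commented-out loop assumes the `sw`, `diamond_model`, and `new_version` objects from the stream-logging example plus a list of record dictionaries named `all_records`.

```python
from typing import Any, Dict, Iterator, List

MAX_RECORDS_PER_CALL = 1000  # per-call limit stated in this page


def chunk_records(
    records: List[Dict[str, Any]], size: int = MAX_RECORDS_PER_CALL
) -> Iterator[List[Dict[str, Any]]]:
    """Yield consecutive slices of `records`, each holding at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(records), size):
        yield records[start:start + size]


# Hypothetical usage, one transaction per chunk:
# transaction_ids = [
#     sw.transaction.log_records(
#         model_id=diamond_model.id,
#         version_id=new_version.id,
#         records=batch,
#     )
#     for batch in chunk_records(all_records)
# ]

sizes = [len(b) for b in chunk_records([{"id": i} for i in range(2500)])]
print(sizes)  # [1000, 1000, 500]
```

Each chunk becomes its own transaction, so you get one transaction ID per call to track.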

Logging records returns a transaction ID. Use it to track the status of the upload and confirm that the data was ingested successfully into the Superwise platform.

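```python
# log_records returns a dict; look up the transaction by its ID
transaction = sw.transaction.get(transaction_id=transaction_id["transaction_id"])

# Print the transaction's ingestion status
print(transaction.get_properties()["status"])
```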
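Ingestion may not complete immediately, so you might poll the status until it settles. The sketch below is a generic polling loop that works with any status-returning callable. `wait_for_status` and its parameters are hypothetical, and the status names in the commented usage are placeholders: check which status values your Superwise version actually reports before relying on them.

```python
import time
from typing import Callable, Iterable


def wait_for_status(
    get_status: Callable[[], str],
    terminal_statuses: Iterable[str],
    interval_s: float = 5.0,
    timeout_s: float = 300.0,
) -> str:
    """Call `get_status` until it returns a terminal status or the timeout expires."""
    terminal = set(terminal_statuses)
    deadline = time.monotonic() + timeout_s
    while True:
        status = get_status()
        if status in terminal:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"timed out; last status was {status!r}")
        time.sleep(interval_s)


# Hypothetical usage with the SDK calls shown above (status names are placeholders):
# final_status = wait_for_status(
#     lambda: sw.transaction.get(
#         transaction_id=transaction_id["transaction_id"]
#     ).get_properties()["status"],
#     terminal_statuses={"PLACEHOLDER_SUCCESS", "PLACEHOLDER_FAILURE"},
# )
```

Re-fetching the transaction on every poll matters: a transaction object obtained once may hold a snapshot of its properties rather than the live status.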

Batch collector

In batch mode, you provide Superwise with flat files that contain the model's predictions.

Out of the box, the Superwise SDK can collect files from AWS S3 and Google Cloud Storage (GCS).

Superwise supports both CSV and Parquet files.

The following example shows the expected layout of a flat file: a header row with the column names, followed by one row per record.

| Feature 1 | Feature 2 | Feature 3 |
|---|---|---|
| "ABC" | "Red" | 123 |
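If your predictions are held in Python objects, the standard `csv` module can produce a file in this layout. This is a minimal sketch: `write_flat_file` is a hypothetical helper, the column names mirror the placeholder table above, and the output path `predictions.csv` is illustrative.

```python
import csv
from typing import Dict, List, Sequence


def write_flat_file(path: str, columns: Sequence[str], rows: List[Dict[str, object]]) -> None:
    """Write `rows` to a CSV file whose first row holds the column names."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(columns))
        writer.writeheader()  # first row: feature/entity names
        writer.writerows(rows)  # one row per record


write_flat_file(
    "predictions.csv",  # hypothetical output path
    columns=["Feature 1", "Feature 2", "Feature 3"],
    rows=[{"Feature 1": "ABC", "Feature 2": "Red", "Feature 3": 123}],
)
```

For Parquet output you would need a third-party library such as pandas or pyarrow instead of the standard library.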

When the files containing your batch predictions are ready, use the SDK to submit a batch request. Superwise collects the files and creates a new transaction in the platform.

File size limitation

The combined size of all the dataset's data files must not exceed 100 MB.
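A quick pre-flight check can catch an oversized batch before you submit it. This sketch sums local file sizes with `os.path.getsize`. It assumes that 100 MB means 100 × 1024 × 1024 bytes, which this page does not specify, so adjust `MAX_TOTAL_BYTES` if your limit is counted differently. Both helpers are hypothetical.

```python
import os
from typing import Iterable

# Assumption: "100 MB" is read as 100 MiB (100 * 1024 * 1024 bytes).
MAX_TOTAL_BYTES = 100 * 1024 * 1024


def total_size_bytes(paths: Iterable[str]) -> int:
    """Return the combined size, in bytes, of the given local files."""
    return sum(os.path.getsize(p) for p in paths)


def check_batch_size(paths: Iterable[str], limit: int = MAX_TOTAL_BYTES) -> int:
    """Raise ValueError if the files together exceed `limit`; otherwise return their total size."""
    total = total_size_bytes(list(paths))
    if total > limit:
        raise ValueError(f"batch is {total} bytes, above the {limit}-byte limit")
    return total
```

For files already in S3 or GCS you would read object sizes from the storage service instead, which this sketch does not cover.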


AWS S3

Once your production serving data is available in S3, use the following snippet to have the SDK collect the data and send it to the platform.

```python
transaction_id = sw.transaction.log_from_s3(
    file_path="s3://PATH",
    aws_access_key_id="ACCESS-KEY",
    aws_secret_access_key="SECRET-KEY",
    model_id=diamond_model.id,
    version_id=new_version.id,
    metadata={"KEY": "VALUE"},  # optional
)
```
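Rather than hard-coding AWS keys in source code, you may prefer to read them from the environment. This sketch uses the conventional `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` variable names; the `aws_keys_from_env` helper is hypothetical, and the commented call simply passes its results to the `log_from_s3` parameters shown above.

```python
import os
from typing import Mapping, Optional, Tuple


def aws_keys_from_env(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (access key ID, secret access key) read from environment variables."""
    env = os.environ if env is None else env
    try:
        return env["AWS_ACCESS_KEY_ID"], env["AWS_SECRET_ACCESS_KEY"]
    except KeyError as missing:
        raise RuntimeError(f"environment variable {missing} is not set") from None


# Hypothetical usage:
# access_key, secret_key = aws_keys_from_env()
# transaction_id = sw.transaction.log_from_s3(
#     file_path="s3://PATH",
#     aws_access_key_id=access_key,
#     aws_secret_access_key=secret_key,
#     model_id=diamond_model.id,
#     version_id=new_version.id,
# )
```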


Google Cloud Storage (GCS)

For GCS, use the following snippet to have the SDK collect the data and send it to the platform.

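```python
import json

# Load your GCS service-account key file into a dict
# (assumed: the SDK expects the key's JSON contents as a dict)
with open("REPLACE-WITH-PATH-TO-SERVICE-ACCOUNT-KEY.json") as f:
    service_account = json.load(f)

transaction_id = sw.transaction.log_from_gcs(
    file_path="gs://PATH",
    service_account=service_account,
    model_id=diamond_model.id,
    version_id=new_version.id,
    metadata={"KEY": "VALUE"},  # optional
)
```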

Log from local file

Superwise also allows you to log data from a local file.

File settings

The file name must be unique.

```python
transaction_id = sw.transaction.log_from_local_file(
    file_path="REPLACE-WITH-PATH-TO-LOCAL-FILE",
    model_id=diamond_model.id,
    version_id=new_version.id,
    metadata={"KEY": "VALUE"},  # optional
)
```
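Since each file name must be unique, one option is to build names from a UTC timestamp and a random suffix. `unique_file_name` is a hypothetical helper built on the standard `datetime` and `uuid` modules; it makes collisions very unlikely but does not check which names have already been uploaded.

```python
import uuid
from datetime import datetime, timezone


def unique_file_name(base: str, suffix: str = ".csv") -> str:
    """Return a name like '<base>_<UTC timestamp>_<8 random hex chars><suffix>'."""
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    token = uuid.uuid4().hex[:8]  # random part keeps names distinct within the same second
    return f"{base}_{stamp}_{token}{suffix}"


# Hypothetical usage: save your predictions under this name, then pass
# the resulting path as `file_path` to log_from_local_file.
name = unique_file_name("predictions", ".parquet")
```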

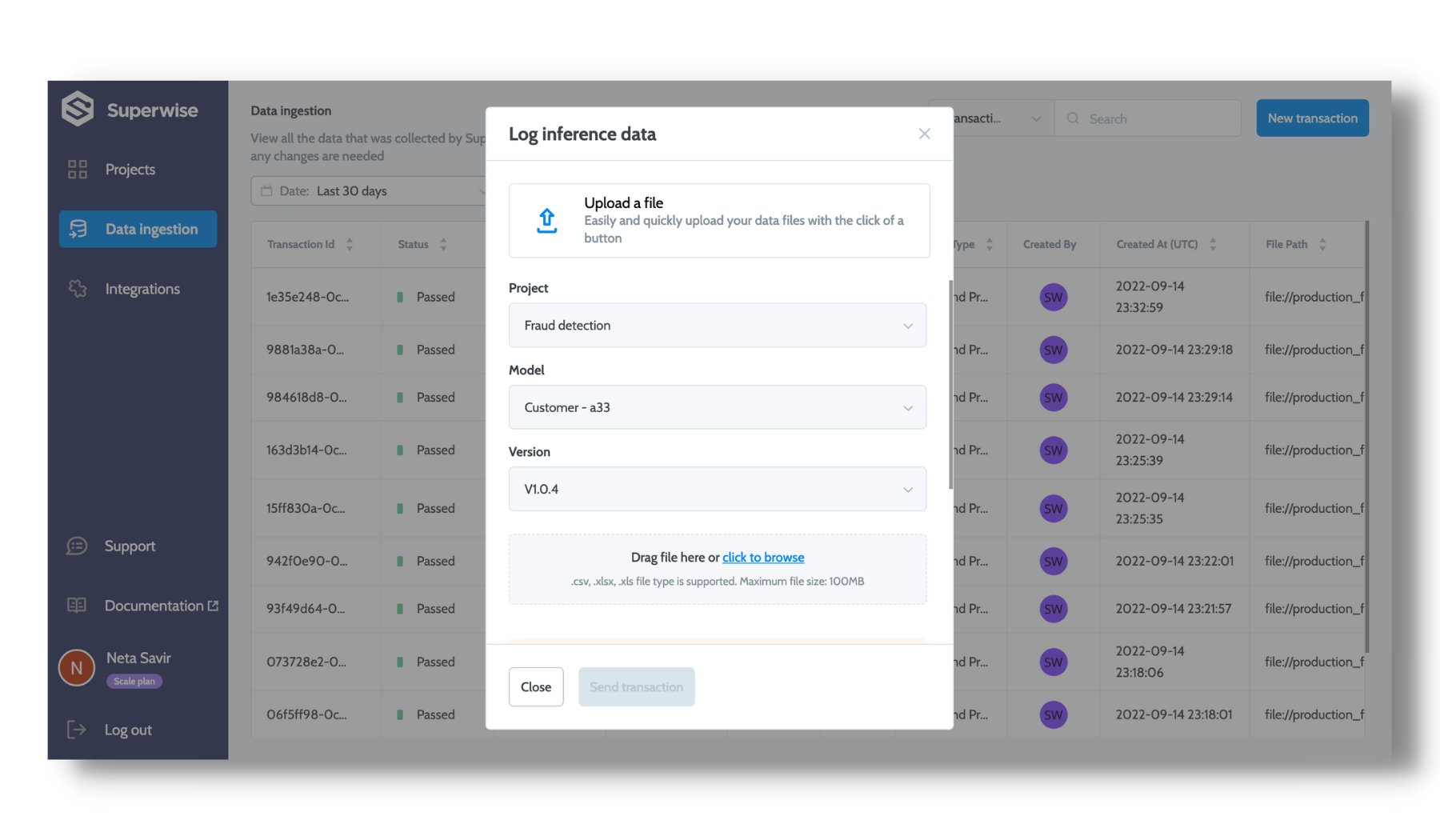

Log production data via the UI

- Start from the Models screen (hover over a specific model) or from the Data ingestion page, where you click the "Send transaction" button.

- Choose a model and a version.

- Keep your browser open while the file uploads; closing it causes the upload to fail.

- Send the transaction.

Prediction file limitations

- The combined size of all the dataset's data files must not exceed 100 MB.

- The first row must contain the feature/entity names.

- The file's schema must match the schema of the selected version.

- No duplicate records (the same record ID must not appear more than once).
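A local check against the header and duplicate-ID rules can save a failed upload. This sketch assumes a CSV file and a record-ID column named `record_id`; that column name is a placeholder, so substitute whichever column your version's schema uses as its ID. It does not verify the schema match or the size limit.

```python
import csv
from typing import List, Set


def validate_prediction_file(path: str, id_column: str = "record_id") -> List[str]:
    """Return a list of problems found in a CSV prediction file (empty if none)."""
    problems: List[str] = []
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)  # treats the first row as the column names
        header = reader.fieldnames or []
        if id_column not in header:
            problems.append(f"header row lacks the ID column {id_column!r}")
            return problems
        seen: Set[str] = set()
        for row_no, row in enumerate(reader, start=2):  # row 1 is the header
            record_id = row[id_column]
            if record_id in seen:
                problems.append(f"duplicate record ID {record_id!r} on row {row_no}")
            seen.add(record_id)
    return problems
```

For example, a file whose data rows carry the IDs 1, 2, 1 yields a single problem for the second occurrence of ID 1.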

Log from Azure

Superwise also allows you to log data from a file in Azure Blob Storage.

```python
transaction_id = sw.transaction.log_from_azure(
    azure_uri="abfs://CONTAINER/path/to/blob",
    connection_string="CONNECTION-STRING",
    model_id=diamond_model.id,
    version_id=new_version.id,
)
```

Log from dataframe

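You can also log predictions directly from a pandas DataFrame.

```python
# `ongoing_predictions` is a pandas DataFrame holding the features and predictions
transaction_id = sw.transaction.log_from_dataframe(
    dataframe=ongoing_predictions,
    model_id=diamond_model.id,
    version_id=new_version.id,
)
```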
