Configuring an LLM model

Every agent type that a user can develop and configure in the SUPERWISE® builder studio requires an underlying LLM to be configured.

SUPERWISE® comes with built-in support for a variety of leading external LLM providers such as OpenAI, Google AI, and more. To accommodate organizations that prefer privately deployed models, and to offer flexibility of choice, the platform also provides options to connect to other OpenAI API-compatible models.

External LLM providers

The natively supported providers currently include:

- OpenAI

- Google AI

- OpenAI Compatible

- Anthropic

Please note that to allow users to easily reuse the same model provider settings, all these settings (e.g., an OpenAI token for the OpenAI provider) are saved under the "Integration" screen and can be reused in different model settings across the tenant.

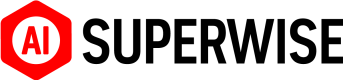

Adding an external based model via the UI

When configuring a model from the list of external providers above, the user will need to provide:

- The specific model and version that the provider offers.

- A valid, working API key. Before you proceed, the API key is tested against the configured provider to ensure it is valid.

Adding an external based model via the SDK

List the currently supported external providers:

from superwise_api.models.agent.agent import ModelProvider

[provider.value for provider in ModelProvider]

List the currently supported model versions:

from superwise_api.models.agent.agent import GoogleModelVersion, OpenAIModelVersion, AnthropicModelVersion

display([model_version.value for model_version in GoogleModelVersion])

display([model_version.value for model_version in OpenAIModelVersion])

display([model_version.value for model_version in AnthropicModelVersion])

Example of how to configure an LLM model:

from superwise_api.models.agent.agent import OpenAIModel, OpenAIModelVersion

model = OpenAIModel(version=OpenAIModelVersion.GPT_4, api_token="Open API token")

Modify model parameters

In the context of Large Language Models (LLMs), parameters are the adjustable factors that influence how the model generates text and makes decisions based on given inputs.

- Temperature: Controls the randomness of the model’s outputs; lower values produce more deterministic responses, while higher values increase variety.

  - OpenAI: The temperature value can range between 0 and 2, with a default value of 0.

  - GoogleAI: The temperature value can range between 0 and 1, with a default value of 0.

- Top-p (Nucleus Sampling): Limits the model’s output options to a subset of the highest-probability tokens that collectively account for a probability mass of p, ensuring more coherent text generation.

  - The top-p value can range between 0 and 1, with a default value of 1.

- Top-k sampling: Restricts the model to sampling from the top k most probable tokens, reducing the likelihood of selecting less relevant words.

  - The top-k value can be any positive integer, with a default value of 40.

- Max tokens: Defines the maximum number of allowed tokens when interacting with the model. If not set explicitly, the default value will be used.

Model parameters availability: Please note that parameters are available only on GoogleAI and OpenAI models, and currently, they are accessible exclusively through the SDK. Additionally, the top-k parameter is exclusively available on GoogleAI models.
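To make the effect of temperature, top-k, and top-p more concrete, the following is a minimal, self-contained sketch of how these parameters can shape the choice of the next token. It is an illustration only and does not use the SUPERWISE® SDK or any provider's actual implementation; the function name `sample_next_token` and the toy scores are hypothetical.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, top_p=1.0, rng=random):
    """Pick one token from `logits`, a dict mapping token -> raw score.

    Hypothetical helper for illustration; real providers apply these
    parameters inside the model server.
    """
    # Temperature 0 is treated here as greedy decoding: take the top-scoring token.
    if temperature == 0:
        return max(logits, key=logits.get)

    # Divide scores by the temperature, then apply a numerically stable softmax.
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(
        ((tok, w / total) for tok, w in weights.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )

    # Top-k: keep only the k most probable tokens.
    if top_k is not None:
        ranked = ranked[:top_k]

    # Top-p: keep the smallest prefix whose cumulative probability reaches p.
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break

    tokens = [tok for tok, _ in kept]
    probs = [prob for _, prob in kept]
    # random.choices treats the weights as relative, so the survivors are renormalized.
    return rng.choices(tokens, weights=probs, k=1)[0]
```

For example, `sample_next_token({"the": 2.0, "a": 1.0, "zebra": -3.0}, top_k=2)` can only ever return "the" or "a", because the lowest-scoring token is filtered out before sampling.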

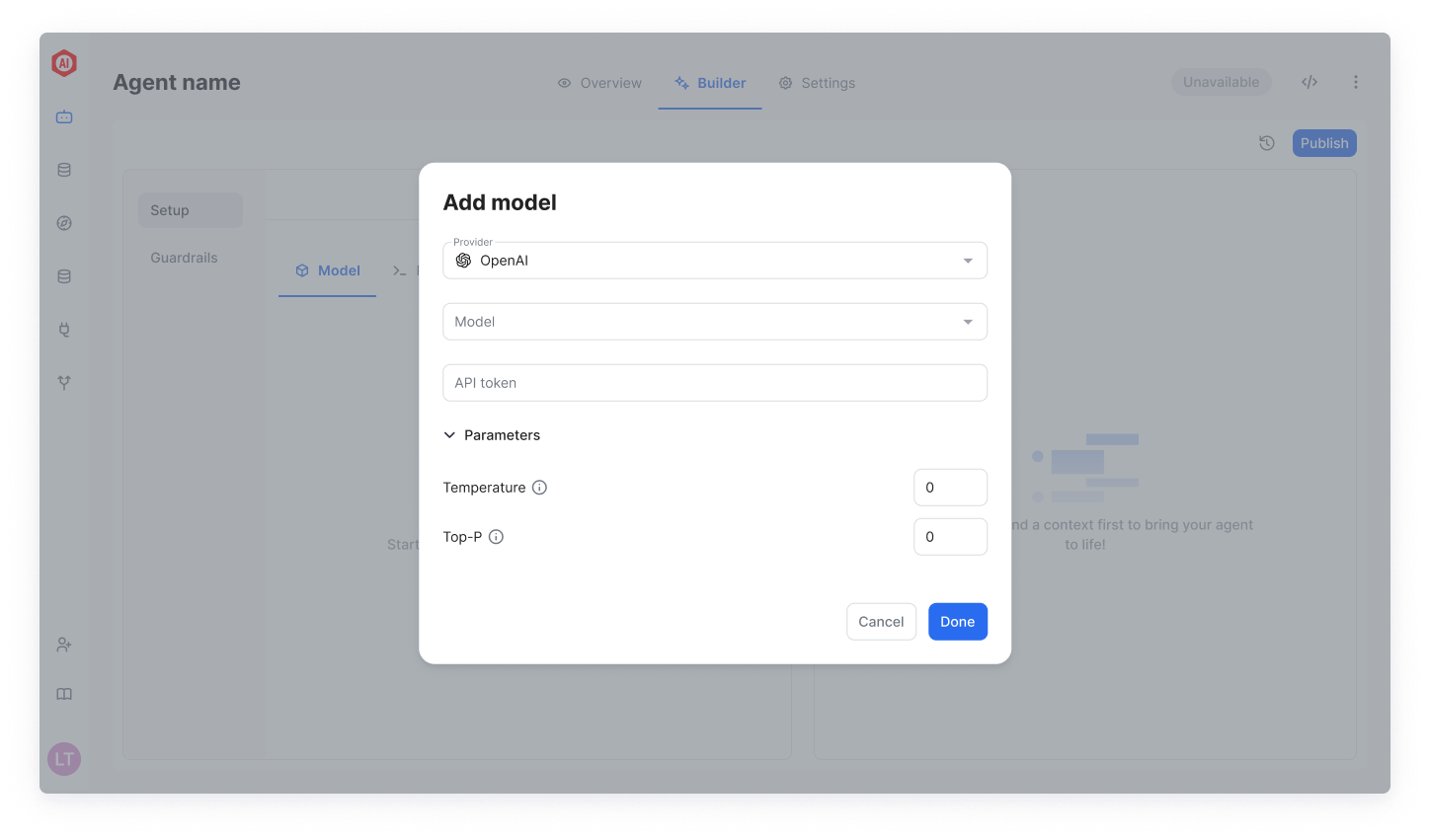

Register a self-hosted Vertex model

SUPERWISE® supports the integration of self-hosted models from the Vertex Model Garden, enabling you to use powerful language foundation models within your agents. To use this feature, follow the steps below.

Prerequisites

Before you begin, ensure that you have deployed your desired language foundation model in the Vertex Model Garden. Only language foundation models are supported by SUPERWISE® at this time. For detailed instructions on deploying a model to the Vertex Model Garden, please refer to their documentation.

Pay attention: SUPERWISE® currently supports only language foundation models.

Connect the deployed model to your agent

Once your model is successfully deployed in the Vertex Model Garden, you will need to integrate it with your SUPERWISE® agent.

Enter the following required connection details:

- Project ID: Your unique Google Cloud Project identifier.

- Endpoint ID: The specific ID of the deployed model's endpoint.

- Location: The regional location of your deployed model.

- GCP Service Account: The credentials allowing SUPERWISE® to access your hosted model. The service account should have aiplatform.user permissions.

Click the Test & Save button to ensure that the connection to the self-hosted model has been established successfully. Once the connection is verified, make sure to click on the Publish button, which will apply and visibly reflect the changes within your agent.
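As a rough illustration of how the Project ID, Location, and Endpoint ID fit together, the sketch below assembles them into the resource name and regional API host that Vertex AI uses to address a deployed endpoint. The class name `VertexEndpointDetails` and the sample values are hypothetical and are not part of the SUPERWISE® SDK; the sketch only helps to sanity-check the values before entering them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VertexEndpointDetails:
    """Hypothetical container for the connection details listed above."""

    project_id: str
    endpoint_id: str
    location: str

    def __post_init__(self):
        # Reject empty values early, since all three fields are required.
        for name in ("project_id", "endpoint_id", "location"):
            if not getattr(self, name).strip():
                raise ValueError(f"{name} must not be empty")

    def resource_name(self) -> str:
        # Vertex AI resource name format for a deployed endpoint.
        return (
            f"projects/{self.project_id}"
            f"/locations/{self.location}"
            f"/endpoints/{self.endpoint_id}"
        )

    def api_host(self) -> str:
        # Regional Vertex AI API host for the endpoint's location.
        return f"{self.location}-aiplatform.googleapis.com"


# Placeholder values for illustration only.
details = VertexEndpointDetails(
    project_id="my-project",
    endpoint_id="1234567890",
    location="us-central1",
)
print(details.resource_name())
print(details.api_host())
```

If the resource name built from your values does not match the endpoint shown in the Google Cloud console, one of the three fields is likely mistyped.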

Good to know: To maximize the potential of your private LLM, delve into SUPERWISE®'s strategies for employing the model within the ReAct framework.

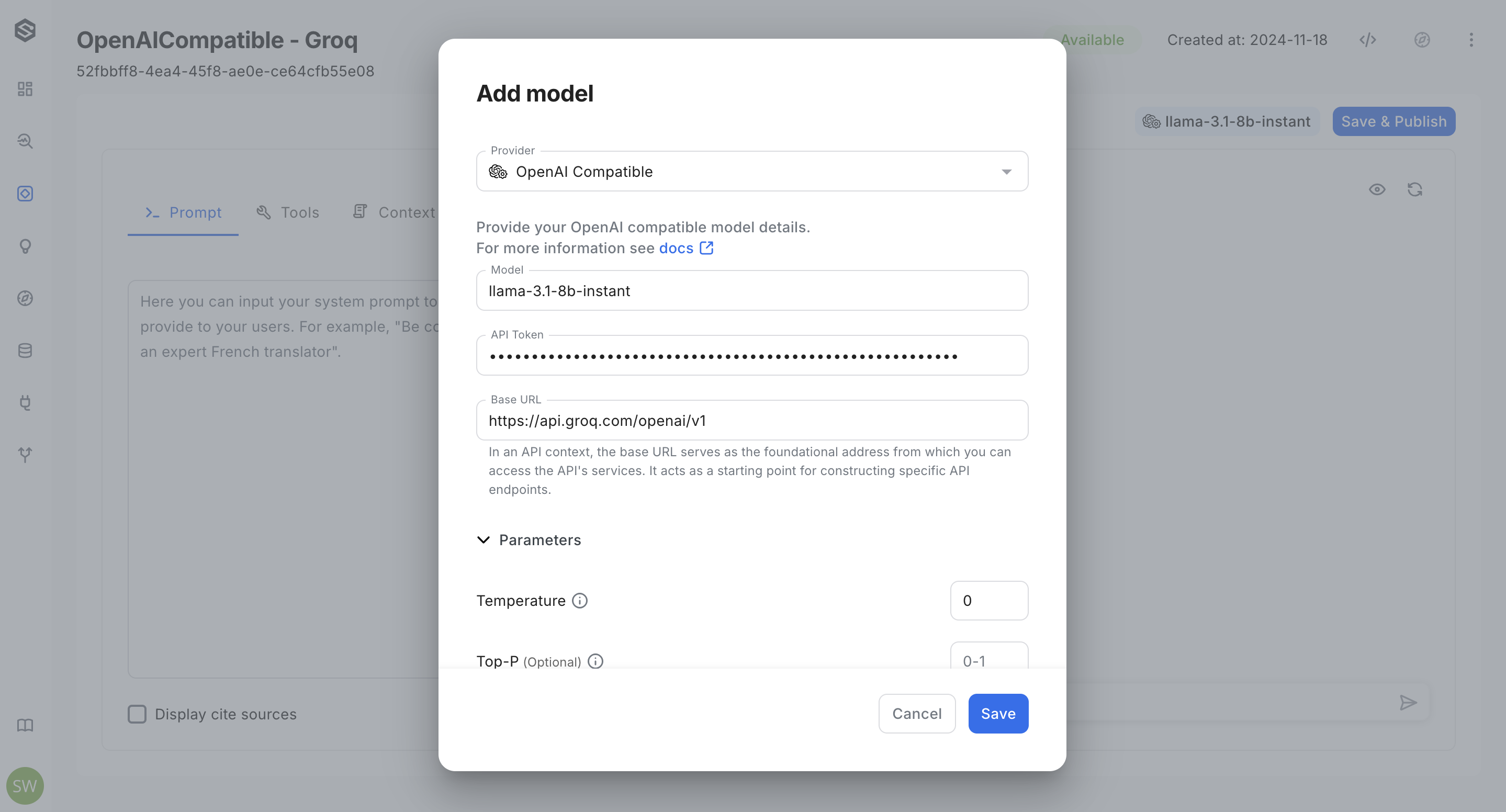

Register OpenAI compatible model

SUPERWISE® supports the integration of models with OpenAI-compatible APIs, allowing you to utilize various model providers and open-source tools that adhere to the OpenAI API schema. Providers and tools that support OpenAI-compatible APIs include Ollama, LlamaFile, vLLM, FastChat, Groq, OpenRouter, Together AI, Vertex AI, and Hugging Face. To leverage this feature, follow the outlined steps below.

Prerequisite

Before you begin, make sure that the provider or tool you are using has an endpoint that exposes an OpenAI-compatible API. This can be tested using OpenAI libraries.
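One way to run such a check without installing extra packages is sketched below. It uses only the Python standard library rather than the OpenAI libraries, and it assumes the endpoint serves the chat completions route at `<base URL>/chat/completions` with bearer-token authentication, as OpenAI-compatible servers generally do. The function names, the base URL, the token, and the model identifier are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url, api_token, model, prompt="ping"):
    """Build a minimal chat completions request for an OpenAI-compatible API."""
    # Tolerate base URLs written with or without a trailing slash.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def endpoint_is_compatible(request, timeout=10):
    """Send the request and check for the `choices` field of a chat completion.

    urlopen raises urllib.error.HTTPError on 4xx/5xx responses, which
    usually points to a wrong URL, token, or model identifier.
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return "choices" in body


# Placeholder values; replace them with your provider's details.
request = build_chat_request(
    base_url="http://localhost:11434/v1/",
    api_token="your-api-token",
    model="your-model-id",
)
print(request.full_url)
```

Calling `endpoint_is_compatible(request)` performs the actual network call, so run it only once the placeholder values point at a reachable endpoint.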

Pay attention: SUPERWISE® currently supports only language foundation models.

Experts' Recommendation: We recommend using a 7-billion-parameter model or larger (e.g., Llama 3.1 8B).

Connect an OpenAI compatible model to your agent via the UI

Once you have verified that the model matches the prerequisites, you will need to integrate it with your SUPERWISE® agent.

Enter the following required connection details:

- Model: The model identifier (e.g., gpt-4-turbo-2024-04-09).

- API Token: The provider's API token.

- Base URL: The OpenAI compatible API endpoint. In an API context, the base URL serves as the foundational address from which you can access the API's services. It acts as a starting point for constructing specific API endpoints (e.g., https://api.openai.com/v1/).

Click the Test & Save button to ensure that the connection to the model has been established successfully. Once the connection is verified, make sure to click on the Publish button, which will apply and visibly reflect the changes within your agent.

Connect an OpenAI compatible model to your agent via the SDK

Once you have verified that the model matches the prerequisites, you can connect it using the SUPERWISE® SDK. The OpenAICompatibleModel object takes the following fields:

- version: The model identifier (e.g., gpt-4-turbo-2024-04-09).

- api_token: The provider's API token.

- base_url: The OpenAI compatible API endpoint. In an API context, the base URL serves as the foundational address from which you can access the API's services. It acts as a starting point for constructing specific API endpoints (e.g., https://api.openai.com/v1/).

from superwise_api.models.agent.agent import OpenAICompatibleModel

model = OpenAICompatibleModel(version="gpt-4-turbo-2024-04-09", api_token="Open API token", base_url="https://api.openai.com/v1")