Create a basic LLM assistant agent

The Basic LLM Assistant is a streamlined agent that lets users communicate directly with the LLM without relying on external tools or contextual data. This agent type acts as a straightforward intermediary between the user and the model, which improves response times when no additional context is needed.

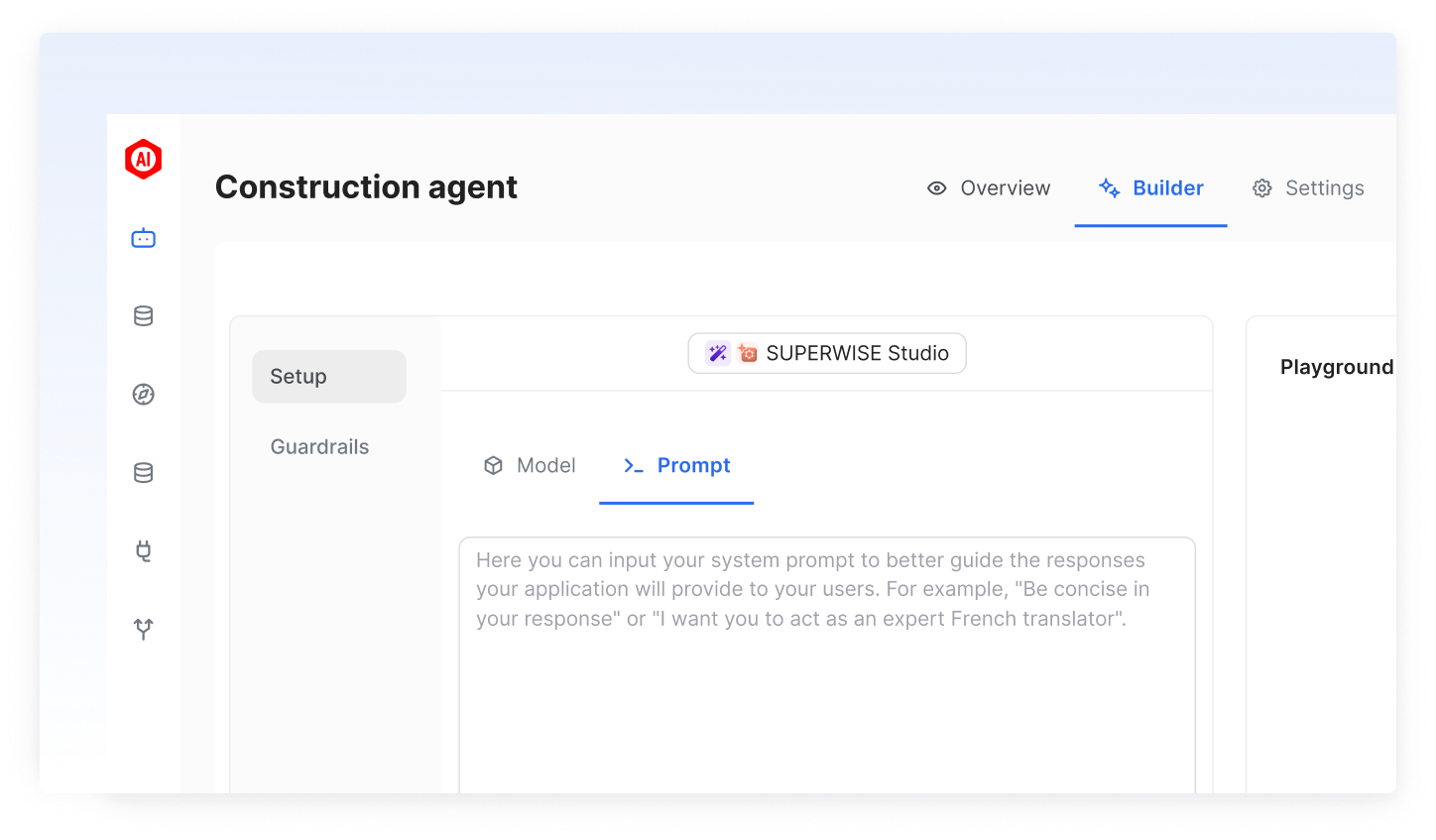

Using the UI

To create a basic LLM assistant agent, provide the following:

- Model - the LLM model settings that the agent will use as its primary model

- Prompt (optional) - a prompt that guides the model to perform the specific required task. Customizing the initial prompt helps the assistant perform better by providing context and setting the direction of the conversation. For more detail, see the prompt engineering guidelines.
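As a conceptual illustration only, the sketch below shows how an optional prompt might be combined with a user message when a model is called directly, with no tools or retrieved context. It is not the SUPERWISE® implementation: the `build_messages` helper and the role-based message format are assumptions made for this example.

```python
from typing import Dict, List, Optional


def build_messages(user_message: str, prompt: Optional[str] = None) -> List[Dict[str, str]]:
    """Assemble the chat messages for a single user turn (hypothetical helper)."""
    messages: List[Dict[str, str]] = []
    # The prompt is optional: add a system message only when one is configured.
    if prompt and prompt.strip():
        messages.append({"role": "system", "content": prompt.strip()})
    # No tools or external context are attached; the user message is passed through as-is.
    messages.append({"role": "user", "content": user_message})
    return messages


# With a prompt, the model receives guidance before the user's message.
with_prompt = build_messages(
    "Summarize this ticket.",
    prompt="You are a concise support assistant.",
)

# Without a prompt, only the user's message is sent.
without_prompt = build_messages("Summarize this ticket.")
```

In this sketch, omitting the prompt still yields a valid request, which mirrors the optional nature of the prompt field described above.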

Good to know: Use the playground section to immediately test the chat agent based on your current builder configuration. Playground interactions are neither persisted nor recorded.

Using the SDK

Prerequisites: To install and properly configure the SUPERWISE® SDK, please visit the SDK guide.

Create agent configuration:

from superwise_api.models.agent.agent import BasicLLMConfig

agent_config = BasicLLMConfig(llm_model=model, prompt=prompt)