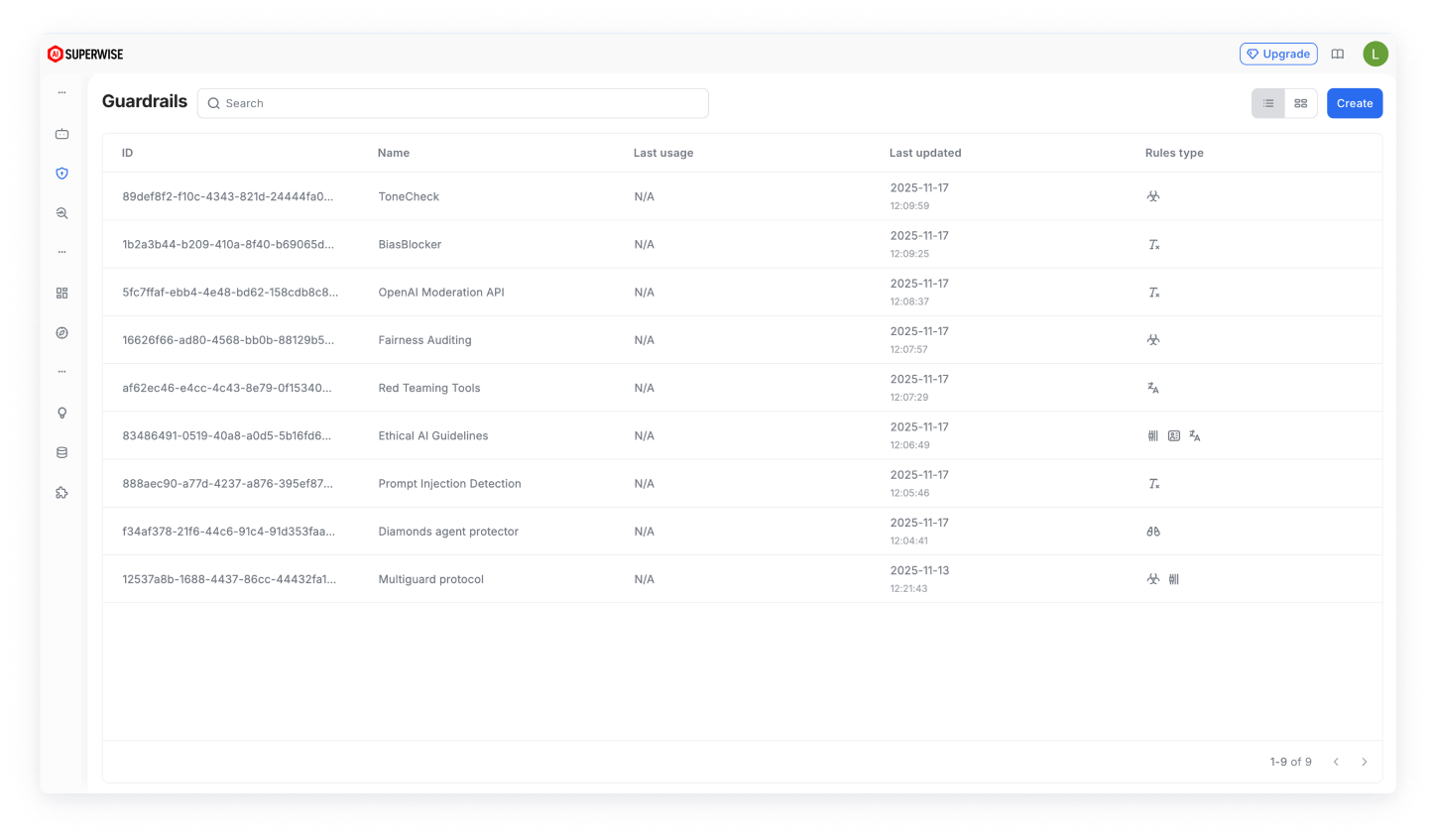

Guardrails

Guardrails are mechanisms designed to ensure that LLMs generate safe, accurate, and appropriate content. They include rules, ethical guidelines, content filters, and moderation to prevent harmful or biased outputs. These safeguards balance the power of LLMs with responsible AI use. Read more about the guardrails concept here.

The SUPERWISE® platform lets users create simple rule-based guardrail policies, each combining one or more rules, and apply them across their AI operations. Users can also manage a guardrail's version history, test its settings, and gain visibility into its usage. SUPERWISE® guardrails can be used natively when deploying agents, or directly through the API or SDK for external-facing AI operations.
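
To make the idea of a policy that combines one or more rules concrete, here is a minimal, generic sketch in plain Python. It does not use or reproduce the SUPERWISE® API or SDK: the `Rule` and `Policy` classes, the rule names, and the `check` method are all hypothetical names chosen for illustration only.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Rule:
    """A single named check (hypothetical structure, not the SUPERWISE SDK)."""
    name: str
    violates: Callable[[str], bool]  # returns True when the text breaks the rule


@dataclass
class Policy:
    """A guardrail policy: a named collection of one or more rules."""
    name: str
    rules: List[Rule]

    def check(self, text: str) -> List[str]:
        """Return the names of every rule the text violates (empty list = pass)."""
        return [rule.name for rule in self.rules if rule.violates(text)]


# Two toy rules, assumed purely for illustration.
no_email = Rule(
    "no_email",
    lambda t: re.search(r"[\w.+-]+@[\w-]+\.[\w.]+", t) is not None,
)
max_length = Rule("max_length_200", lambda t: len(t) > 200)

policy = Policy("demo_policy", [no_email, max_length])

print(policy.check("Contact me at jane@example.com"))  # ['no_email']
print(policy.check("Hello, how can I help?"))          # []
```

In this sketch a policy passes only when no rule reports a violation. Returning the list of violated rule names, rather than a single boolean, is one way to support the kind of usage visibility described above.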
