Quickstart

Monitor your first model in 5 minutes

Let's get your first model monitored with Superwise as quickly as possible.

This guide walks you through creating and configuring a new model on the platform, giving you model observability in 5 minutes.

Before you begin

- Make sure you have an active Superwise account. If you don't, sign up for free at portal.superwise.ai

- Create your authentication keys

- Install our SDK

End-to-End Notebook example

The following quickstart guide is also available in a runnable notebook, which can be found here

1. Register a new model and its first version

To start working with the Superwise SDK, first import the package and initialize the Superwise object.

import os

from superwise import Superwise

os.environ['SUPERWISE_CLIENT_ID'] = 'REPLACE_WITH_YOUR_CLIENT'

os.environ['SUPERWISE_SECRET'] = 'REPLACE_WITH_YOUR_SECRET'

sw = Superwise()
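Before constructing the client, it can help to confirm that both environment variables are actually set. The helper below is a minimal sketch that does not depend on the SDK; `missing_credentials` is a hypothetical name, and the sketch assumes the SDK reads the two variables shown above from the environment.

```python
import os

# Variable names taken from the snippet above.
REQUIRED_VARS = ("SUPERWISE_CLIENT_ID", "SUPERWISE_SECRET")


def missing_credentials(env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Example with an explicit mapping instead of the real environment.
example_env = {"SUPERWISE_CLIENT_ID": "my-client-id"}
print(missing_credentials(example_env))  # ['SUPERWISE_SECRET']
```

Calling `missing_credentials()` with no argument checks the real process environment, so an empty list means both variables are present.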

For on-prem users

Initialize Superwise with an additional argument, superwise_host (the domain defined in the installation phase of Superwise, for example “managed.sw-tools.ai”):

sw = Superwise(superwise_host=<your internal superwise host>)

First, create a project.

A project is an isolated group of models; you can think of it as a "namespace". Every model must belong to a project, so we will create one now.

from superwise.models.project import Project

project = Project(

    name="My First Project",

    description="project for my first model"

)

project = sw.project.create(project)

Next, register a new model by defining its name, description, and associated project.

from superwise.models.model import Model

diamond_model = Model(

    name="Diamond Model",

    description="Regression model that predicts the diamond price",

    project_id=project.id

)

diamond_model = sw.model.create(diamond_model)

print(f"New model created - {diamond_model.id}")

Now that we have a new model in place, we can start and log its first version.

To create a new version, provide a baseline dataset (e.g., the version's training dataset) so that the platform can automatically infer the version schema and the baseline distributions against which your production data will be compared.

The baseline dataset's schema may include entities with different roles (visit our data entity guide to see the complete role list).

For this quickstart, we will use the training dataset as the baseline and save it to a local file:
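To build some intuition for what "inferring a schema and baseline distributions" can mean, here is a purely conceptual sketch using only the standard library. It is not how Superwise performs inference; `summarize_baseline`, the sample columns, and the choice of summary statistics are all assumptions made for illustration.

```python
import csv
import io
import statistics


def summarize_baseline(csv_text):
    """Infer a rough type and summary per column from non-empty CSV text."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    summary = {}
    for column in rows[0].keys():
        values = [row[column] for row in rows]
        try:
            numbers = [float(v) for v in values]
        except ValueError:
            # Non-numeric column: record the distinct categories seen.
            summary[column] = {
                "type": "categorical",
                "categories": sorted(set(values)),
            }
        else:
            # Numeric column: keep a few simple distribution statistics.
            summary[column] = {
                "type": "numeric",
                "mean": statistics.mean(numbers),
                "min": min(numbers),
                "max": max(numbers),
            }
    return summary


# Illustrative sample; these are not the real baseline columns.
sample = "carat,cut,price\n0.5,Ideal,1500\n1.0,Premium,4500\n"
print(summarize_baseline(sample))
```

A monitoring platform would typically compare later production data against summaries of this kind, which is why the baseline should be representative of what the model was trained on.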

import requests

from io import StringIO

import pandas as pd

url = 'https://gitlab.com/superwise.ai-public/integration/-/raw/main/getting_started/data/baseline.csv?inline=false'

training_data = pd.read_csv(StringIO(requests.get(url).text))

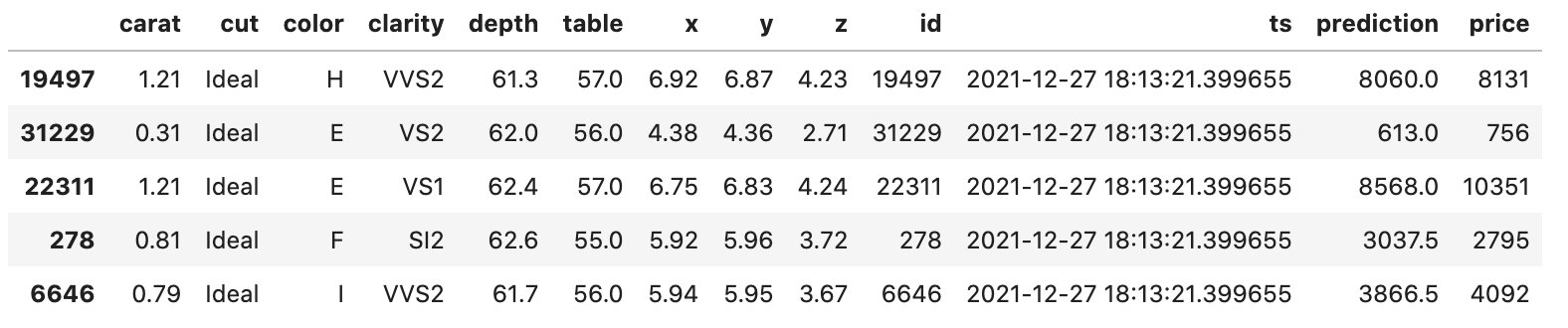

training_data.head()

training_data.to_csv("training.csv", index=False)

File size limitation

The combined size of all baseline data files must not exceed 100MB.

Supported file types

csv, parquet, csv.gz
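A quick local check before uploading can catch both limits early. The following is a minimal sketch, not part of the SDK: `check_baseline_files` is a hypothetical helper, and it assumes "100MB" means 100 × 1024 × 1024 bytes, which the text above does not specify.

```python
import os

# Assumption: "100MB" is interpreted as 100 MiB.
MAX_TOTAL_BYTES = 100 * 1024 * 1024
# Supported file types listed above.
SUPPORTED_SUFFIXES = (".csv", ".parquet", ".csv.gz")


def check_baseline_files(paths):
    """Return a list of problems found; an empty list means no issues."""
    problems = []
    for path in paths:
        if not path.lower().endswith(SUPPORTED_SUFFIXES):
            problems.append(f"unsupported file type: {path}")
    # Only files that exist locally contribute to the size total.
    total = sum(os.path.getsize(p) for p in paths if os.path.exists(p))
    if total > MAX_TOTAL_BYTES:
        problems.append(f"total size {total} bytes exceeds {MAX_TOTAL_BYTES}")
    return problems
```

For this quickstart, `check_baseline_files(["training.csv"])` should return an empty list once the file has been saved.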

After saving the training dataset to a local file, add it to your Superwise project:

from superwise.models.dataset import Dataset

dataset = Dataset(name="Training", files=["training.csv"], project_id=project.id)

dataset = sw.dataset.create(dataset)

Pro tip!

The Dataset API can also read files from cloud storage (S3, GCS, Azure Blob Storage) by using the prefixes s3://, gs://, and abfs:// respectively.

In those cases, pass the auth parameters for the relevant cloud storage. If they are not passed, the auth will be inferred from the environment. For reference:

https://sdk.superwise.ai/controller/dataset/
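The prefix-to-storage mapping described in the tip can be sketched as a small lookup. This is an illustration only: `storage_backend` is a hypothetical helper rather than an SDK function, and the bucket names in the example are made up.

```python
# Prefixes and storage names as listed in the tip above.
CLOUD_PREFIXES = {
    "s3://": "S3",
    "gs://": "GCS",
    "abfs://": "Azure Blob Storage",
}


def storage_backend(path):
    """Return the storage implied by the path prefix, or 'local' if none matches."""
    for prefix, name in CLOUD_PREFIXES.items():
        if path.startswith(prefix):
            return name
    return "local"


# Hypothetical paths for illustration.
for path in ["training.csv", "s3://my-bucket/training.parquet"]:
    print(path, "->", storage_backend(path))
```

A check like this can be useful for deciding up front whether cloud credentials need to be available before creating the dataset.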

You can now create and activate your version:

from superwise.models.version import Version

version = Version(name="1.0.0", model_id=diamond_model.id, dataset_id=dataset.id)

version = sw.version.create(version)

sw.version.activate(version.id)

Now you're good to go and can start logging production data.

Register a model

To read more about advanced options for registering a new model and logging versions, visit our Connecting guides section.

2. Create Policy

Configure and set monitoring policies to automatically detect important issues that might impact your organization or teams.

Use the SDK to retrieve all the policy templates available on the platform:

all_templates = sw.policy.get_policy_templates()

print(all_templates)

After you find a template that meets your needs, add a policy based on that template:

from superwise.resources.superwise_enums import NotifyUpon, ScheduleCron

sw.policy.create_policy_from_template(diamond_model.id, 'Label shift', NotifyUpon.detection_and_resolution, [], ScheduleCron.EVERY_DAY_AT_6AM)

To get notifications on detected anomalies, read more about the notification channels.

3. Log production data

Use the SDK to create new transactions containing production data you want to log and monitor.

The transaction contains the model ID, the version it refers to, and the records to log. The record format should match the version schema definition.
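One way to catch format problems before logging is to compare each record's keys with the columns you expect. The sketch below is independent of the SDK; `find_schema_mismatches` is a hypothetical helper, and the column names are illustrative rather than the real dataset's schema.

```python
def find_schema_mismatches(records, expected_columns):
    """Return (index, missing, unexpected) for each record that does not match."""
    expected = set(expected_columns)
    mismatches = []
    for index, record in enumerate(records):
        keys = set(record)
        missing = sorted(expected - keys)
        unexpected = sorted(keys - expected)
        if missing or unexpected:
            mismatches.append((index, missing, unexpected))
    return mismatches


# Illustrative columns and records, not the real schema.
expected_columns = ["record_id", "carat", "prediction"]
records = [
    {"record_id": 1, "carat": 0.3, "prediction": 500.0},
    {"record_id": 2, "carat": 0.7},
]
print(find_schema_mismatches(records, expected_columns))
# [(1, ['prediction'], [])]
```

Which columns production records are expected to carry depends on your version schema; for example, labels may arrive later than predictions, so the expected set is not necessarily identical to the baseline's columns.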

url = 'https://gitlab.com/superwise.ai-public/integration/-/raw/main/getting_started/data/ongoing_predictions.csv?inline=false'

ongoing_predictions = pd.read_csv(StringIO(requests.get(url).text))

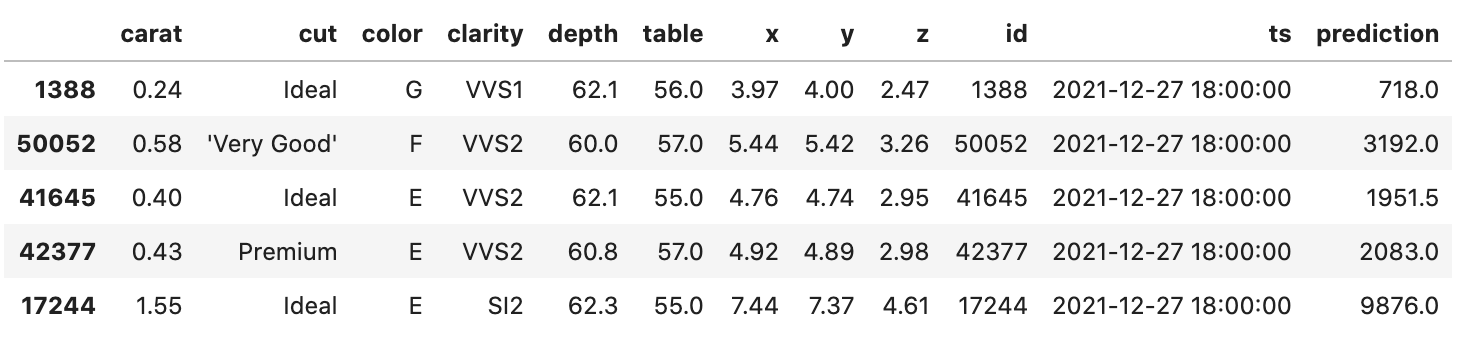

ongoing_predictions.head()

def chunks(lst, n):

    """Yield successive n-sized chunks from lst."""

    for i in range(0, len(lst), n):

        yield lst[i:i + n]

ongoing_predictions_chunks = chunks(ongoing_predictions.to_dict(orient='records'), 1000)

transaction_ids = list()

for ongoing_predictions_chunk in ongoing_predictions_chunks:

    transaction_id = sw.transaction.log_records(

        model_id=diamond_model.id,

        version_id=version.id,

        records=ongoing_predictions_chunk

    )

    transaction_ids.append(transaction_id)

    print(transaction_id)
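To see how the batching behaves without calling the API, the same `chunks` helper can be run on placeholder data. This is a self-contained sketch; the records are stand-ins, not real predictions.

```python
def chunks(lst, n):
    """Yield successive n-sized chunks from lst."""
    for i in range(0, len(lst), n):
        yield lst[i:i + n]


# 2,500 placeholder records split into batches of at most 1,000.
records = [{"record_id": i} for i in range(2500)]
batch_sizes = [len(batch) for batch in chunks(records, 1000)]
print(batch_sizes)  # [1000, 1000, 500]
```

Because `chunks` is a generator, it can be iterated only once; call it again if you need to loop over the batches a second time.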

Production data logging

To review the different methods and formats available for logging your production data, visit our Connecting guides section.

See, that was simple. ✌️

Now what?

- Investigate your model's metrics in the Metrics screen

- Set up segments

- Create a monitoring policy to automate anomaly detection
