Baselines

"...many of the truths we cling to depend greatly on our own point of view."

-Return of the Jedi

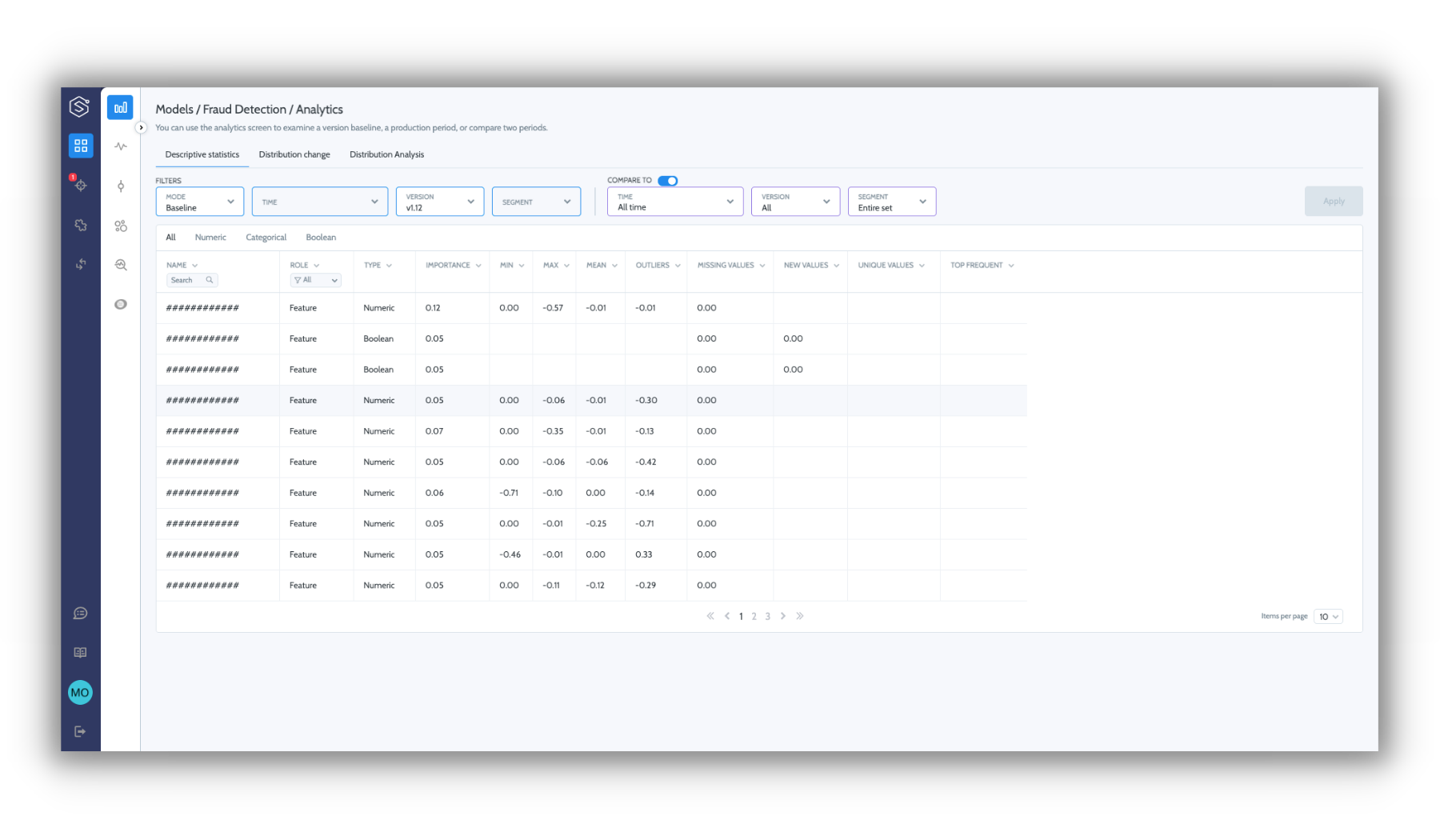

The baseline is a historical reference data set that describes a model's behavior. It contains the model's inputs, outputs, and, optionally, labels. Training or validation data sets are most often used as the initial baseline. Within the Superwise platform, you can also select any production timeframe and apply it as a new baseline ad hoc.

Platform calculations such as drift measure discrepancies between a model's current data and its baseline, letting you easily compare production data to a reference.
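To make the idea of measuring a discrepancy against a baseline concrete, here is a minimal sketch of one common drift metric, the Population Stability Index (PSI). This is an illustration only: the function name `psi`, the bin count, and the choice of PSI itself are assumptions, and nothing here is meant to describe how the Superwise platform computes drift internally.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], current: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` relative to `baseline`.

    Hypothetical helper for illustration; not a Superwise API.
    """
    lo, hi = min(baseline), max(baseline)
    # Bin edges come from the baseline only; fall back to 1.0 if it is constant.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range are clamped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        total = len(values)
        # A small floor avoids log(0) for empty bins.
        return [max(c / total, eps) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Usage: compare a baseline feature to a shifted production sample.
baseline_values = [i / 100 for i in range(100)]
production_values = [x + 0.3 for x in baseline_values]
print(psi(baseline_values, baseline_values))    # identical data: no drift
print(psi(baseline_values, production_values))  # shifted data: positive score
```

A score of zero means the binned distributions are identical, and the score grows as production data moves away from the baseline. The thresholds at which a score becomes "significant" are a judgment call that depends on the use case.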

The platform leverages the baseline to provide three important capabilities:

- Automated schema discovery - Using the provided baseline, the Superwise SDK automates the process of defining the version schema.

- Defining model baseline - Superwise will automatically summarize the statistical properties of your baseline to allow you to compare it with your ongoing production status.

- Overcoming monitoring cold start - Leveraging the baseline, the Superwise anomaly detection engine starts to detect anomalies in your ML process from day one.
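The first two capabilities above can be pictured with a short, library-free sketch: infer a column type for each field in a baseline, then summarize each column's statistical properties. The function names `infer_schema` and `summarize`, the three type labels, and the row format (a list of dictionaries) are all hypothetical and chosen for illustration; the Superwise SDK's actual schema discovery and summaries may work differently.

```python
from collections import Counter
from statistics import mean, pstdev
from typing import Any, Dict, List

Row = Dict[str, Any]


def infer_schema(rows: List[Row]) -> Dict[str, str]:
    """Label each column 'boolean', 'numeric', or 'categorical' (hypothetical types)."""
    schema = {}
    for column in rows[0]:
        values = [r[column] for r in rows if r.get(column) is not None]
        if values and all(isinstance(v, bool) for v in values):
            schema[column] = "boolean"
        elif values and all(
            isinstance(v, (int, float)) and not isinstance(v, bool) for v in values
        ):
            schema[column] = "numeric"
        else:
            schema[column] = "categorical"
    return schema


def summarize(rows: List[Row], schema: Dict[str, str]) -> Dict[str, Dict[str, Any]]:
    """Compute simple per-column statistics to compare production data against."""
    summary = {}
    for column, kind in schema.items():
        values = [r[column] for r in rows if r.get(column) is not None]
        if kind == "numeric":
            summary[column] = {
                "mean": mean(values),
                "std": pstdev(values),
                "min": min(values),
                "max": max(values),
            }
        else:
            total = len(values)
            summary[column] = {
                "frequencies": {k: c / total for k, c in Counter(values).items()}
            }
    return summary


# Usage with a tiny, made-up baseline.
baseline_rows = [
    {"age": 30, "country": "US", "is_fraud": False},
    {"age": 40, "country": "DE", "is_fraud": False},
    {"age": 50, "country": "US", "is_fraud": True},
]
schema = infer_schema(baseline_rows)
print(schema)
print(summarize(baseline_rows, schema))
```

Because these summaries exist before any production traffic arrives, they give a monitoring system something to compare against from the first day, which is the intuition behind the cold-start point.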

What is the right baseline for you?

There is no single definition of a good baseline; it mainly depends on what you want to compare your production data to. In most cases, the training dataset is a good default. It lets you analyze production drift and better understand how relevant your model still is (the higher the drift level, the less relevant the model). However, in highly imbalanced use cases (like fraud detection), it's common to downsample the majority class so that the training dataset is more balanced. In such cases, we recommend using the test dataset instead, which is supposed to remain highly imbalanced. The rebalanced training dataset has "drifted" from production by definition, whereas the test dataset gives a clearer view of the expected behavior of the model and of the data distributions.
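The following sketch shows why a rebalanced training set makes a misleading baseline. It uses made-up numbers (10 fraud cases in 1,000 records) and a hypothetical `downsample_majority` helper; the 4:1 ratio is an arbitrary assumption for illustration.

```python
import random
from typing import List


def positive_rate(labels: List[int]) -> float:
    """Fraction of records labeled 1 (for example, fraud)."""
    return sum(labels) / len(labels)


def downsample_majority(labels: List[int], ratio: float, seed: int = 0) -> List[int]:
    """Keep every minority (1) label and `ratio` majority (0) labels per minority label."""
    rng = random.Random(seed)
    minority = [y for y in labels if y == 1]
    majority = [y for y in labels if y == 0]
    keep = min(len(majority), int(ratio * len(minority)))
    return minority + rng.sample(majority, keep)


# Made-up population: 1% fraud, similar to what production would see.
population = [1] * 10 + [0] * 990
# Rebalanced training labels: 4 legitimate records kept per fraud record.
training = downsample_majority(population, ratio=4)

print(positive_rate(population))  # fraud rate of an untouched (test-like) sample
print(positive_rate(training))    # fraud rate after rebalancing
```

With these numbers the fraud rate rises from 1% in the untouched data to 20% in the rebalanced training set. A baseline built from the training set would therefore report label drift against production even when nothing has changed, which is the reason to prefer the untouched test set.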
