Collecting data from GCS

This guide covers the steps required to build a fully integrated pipeline with Superwise using Google Cloud Storage and a Google Cloud Function.

Steps

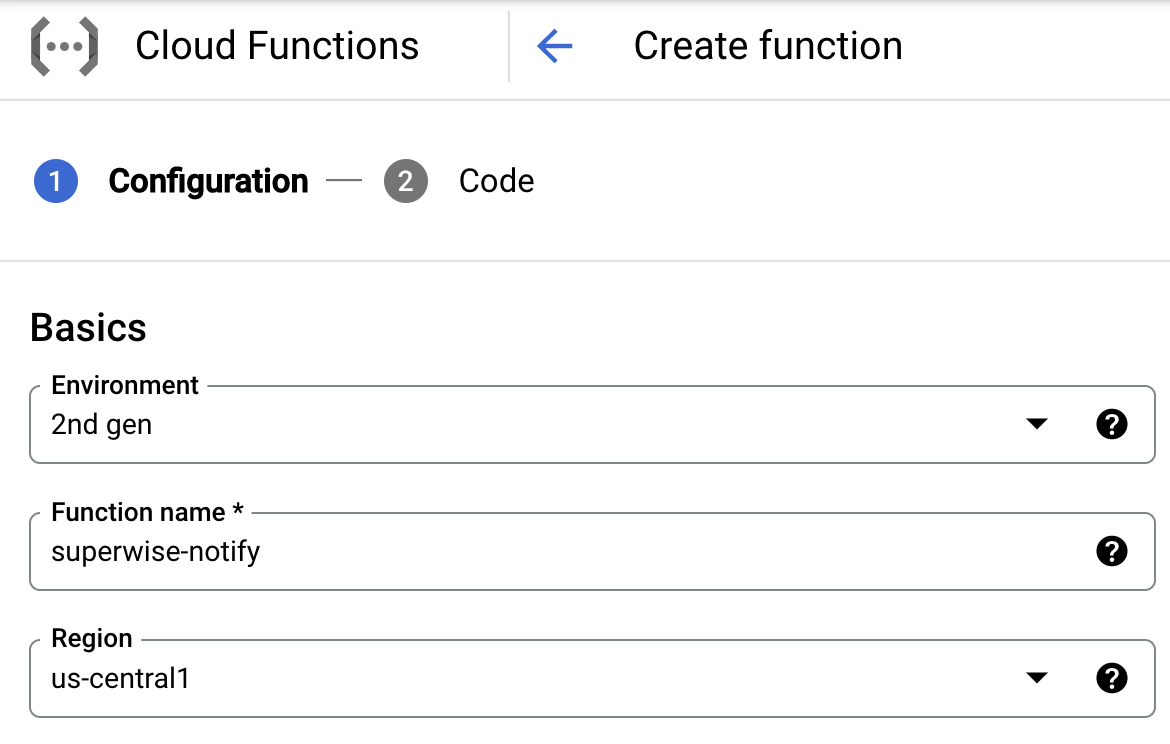

- Create a cloud function

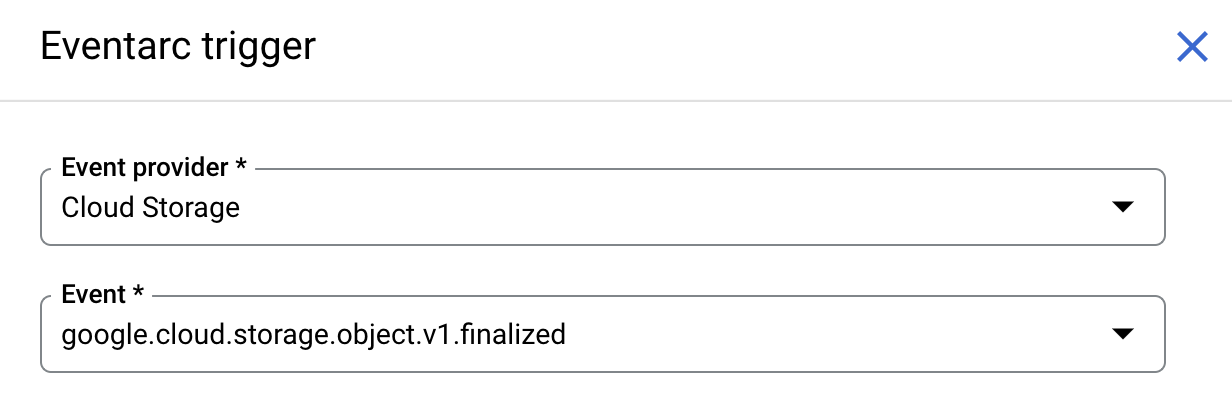

- To invoke the Cloud Function on each new file, add an Eventarc trigger and select the following:

- Event provider - Cloud storage

- Event - google.cloud.storage.object.v1.finalized

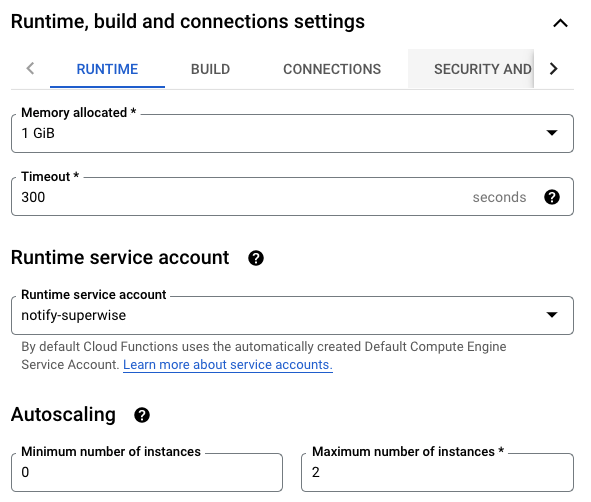

- Runtime, build, and connections settings

a. Increase the allocated memory to 1 GiB and the timeout to 300 seconds

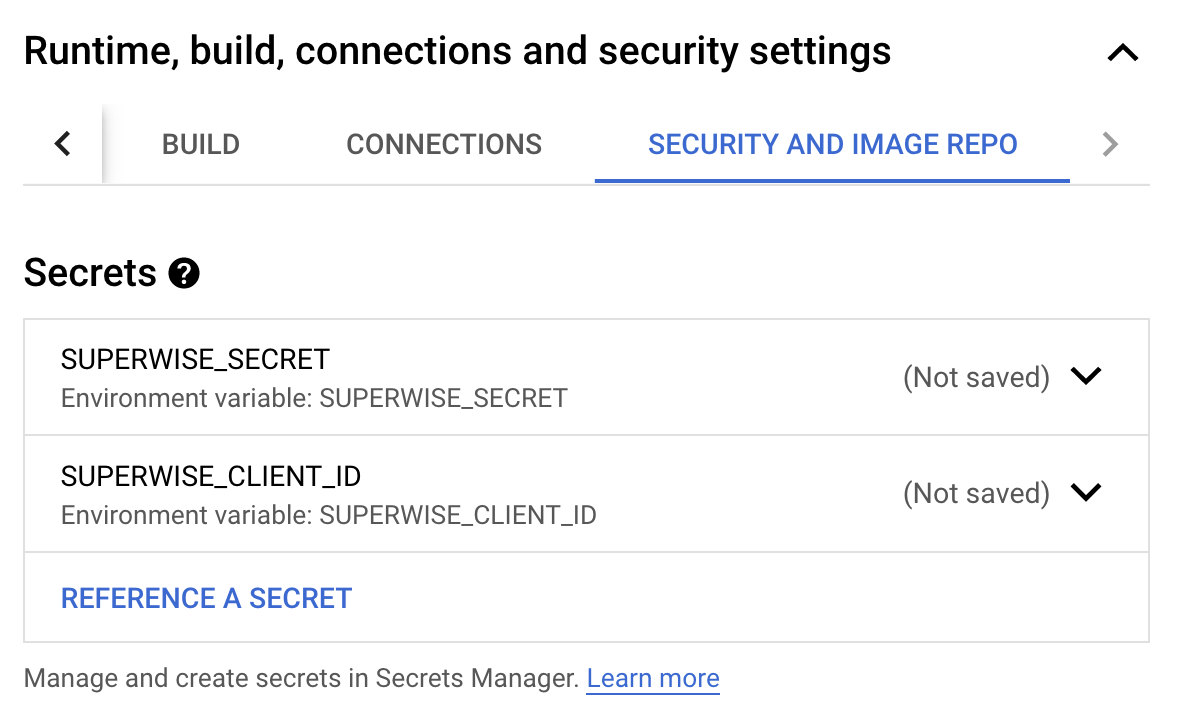

b. Add the client ID and secret as environment variables (read more about Generating tokens)

- Click next and move to the Code section:

a. Select Python 3.10 as the runtime for the function

b. Add the superwise package to the requirements.txt file

c. Insert the following code into the main.py file

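    import re

    import functions_framework
    from superwise import Superwise

    # Superwise() is called without arguments; the client ID and secret
    # come from the environment variables set in the previous step.
    sw_client = Superwise()


    @functions_framework.cloud_event
    def hello_gcs(cloud_event):
        """Log each newly finalized GCS object to Superwise."""
        data = cloud_event.data
        print(f"Event ID: {cloud_event['id']} | Event type: {cloud_event['type']} | Created: {data['timeCreated']}")

        # The model and version IDs are parsed from the object path.
        t_id = sw_client.transaction.log_from_gcs(
            model_id=int(re.findall(r"model_id=(\d+)/", data['name'])[0]),
            version_id=int(re.findall(r"version_id=(\d+)/", data['name'])[0]),
            file_path=f"gs://{data['bucket']}/{data['name']}"
        )
        print(t_id)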
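To make the handler's inputs concrete, the following minimal sketch shows the three event fields the function reads (`bucket`, `name`, and `timeCreated`) and how the `gs://` path passed as `file_path` is assembled from them. The sample values and the helper name `build_gcs_uri` are hypothetical and used only for illustration; a real event payload contains more fields than shown here.

```python
# Hypothetical sample containing only the fields the handler reads
# from cloud_event.data; the values are made up for illustration.
sample_event_data = {
    "bucket": "my-bucket",
    "name": "model_id=12/version_id=3/2023/01/01/predictions.parquet",
    "timeCreated": "2023-01-01T00:00:00.000Z",
}


def build_gcs_uri(data: dict) -> str:
    """Return the gs:// URI for the object described by the event data."""
    return f"gs://{data['bucket']}/{data['name']}"


print(build_gcs_uri(sample_event_data))
```

Running a helper like this locally against a sample payload is a quick way to check the path format before deploying the function.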

Folder structure

The code above assumes the following path convention:

gs://<bucket_name>/model_id=<model_id>/version_id=<version_id>/.../file_name.parquet
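Because the trigger fires for every object finalized in the bucket, a file that does not follow this convention makes `re.findall(...)[0]` raise an `IndexError`. One possible guard, sketched below, validates the object name before anything is sent to Superwise. The function name `parse_object_name` and the stricter pattern (anchored at the start of the object name and requiring a `.parquet` suffix) are assumptions for illustration, not part of the Superwise SDK.

```python
import re
from typing import Optional, Tuple

# Assumed pattern for the object name (the part after gs://<bucket_name>/):
# model_id=<digits>/version_id=<digits>/<anything>.parquet
_PATH_PATTERN = re.compile(r"model_id=(\d+)/version_id=(\d+)/.*\.parquet$")


def parse_object_name(name: str) -> Optional[Tuple[int, int]]:
    """Return (model_id, version_id), or None if the name does not match."""
    match = _PATH_PATTERN.match(name)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


ids = parse_object_name("model_id=12/version_id=3/2023/01/file_name.parquet")
if ids is None:
    print("Skipping object that does not follow the path convention")
else:
    model_id, version_id = ids
    print(f"model_id={model_id}, version_id={version_id}")
```

In the Cloud Function, the handler could return early when the helper yields `None`, so unrelated uploads are skipped rather than causing the invocation to fail.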

For on-prem users

- Initiate Superwise with an additional argument: superwise_host

- Python SDK: please use log_file instead, which supports sending files from your cloud provider's blob storage.

In addition, if Superwise is deployed in a VPC and not exposed to the internet, the Cloud Function must be connected to the VPC in order to send data to Superwise.

That's it! Now you are integrated with Superwise!
